In recent years, research interest in visual navigation towards objects in indoor environments has grown significantly. This growth can be attributed to the recent availability of large navigation datasets in photo-realistic simulated environments, such as Gibson and Matterport3D. However, the navigation tasks supported by these datasets are often restricted to the objects present in the environment at acquisition time. They also fail to account for the realistic scenario in which the target object is a user-specific instance that can be easily confused with similar objects and may be found in multiple locations within the environment. To address these limitations, we propose a new task called Personalized Instance-based Navigation (PIN), in which an embodied agent is tasked with locating and reaching a specific personal object by distinguishing it among multiple instances of the same category. The task is accompanied by PInNED 📌, a dedicated new dataset composed of photo-realistic scenes augmented with additional 3D objects. In each episode, the target object is presented to the agent using two modalities: a set of visual reference images on a neutral background and manually annotated textual descriptions. Through comprehensive evaluations and analyses, we showcase the challenges of the PIN task as well as the performance and shortcomings of currently available methods designed for object-driven navigation, considering both modular and end-to-end agents.

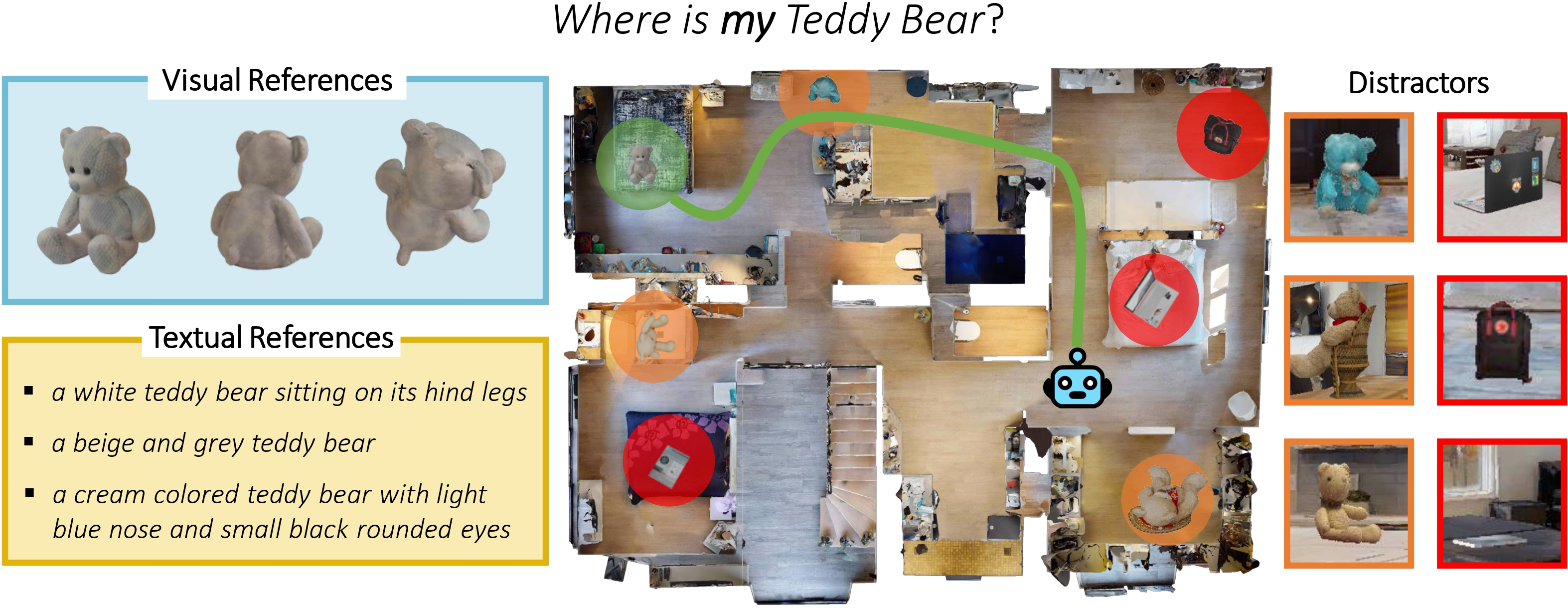

We present a novel task called Personalized Instance-based Navigation (PIN), in which an embodied agent is tasked with locating and reaching a personal object by distinguishing it among multiple distractors (i.e., other objects of the same category as the target or of other categories). The target object, same-category distractors, and other distractors are circled in green, orange, and red, respectively.

The dataset contains 338 objects in total, corresponding to different instances of 18 object categories.
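To make the episode structure more concrete, the following is a minimal sketch of how a PIN episode could be represented. It is an illustration only and not the PInNED data format: the class names, field names, instance identifiers, and file names are all hypothetical. It shows the two target modalities (reference images and textual descriptions) and the split of the remaining objects into same-category and other-category distractors.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class ObjectInstance:
    """One 3D object placed in a scene (hypothetical schema)."""
    instance_id: str  # hypothetical identifier, not a real PInNED ID
    category: str     # one of the dataset's object categories


@dataclass
class PINEpisode:
    """Hypothetical container for a single PIN episode."""
    target: ObjectInstance
    reference_images: List[str]  # paths to views on a neutral background
    descriptions: List[str]      # manually annotated textual descriptions
    placed_objects: List[ObjectInstance] = field(default_factory=list)

    def same_category_distractors(self) -> List[ObjectInstance]:
        # Objects sharing the target's category, excluding the target itself.
        return [
            o for o in self.placed_objects
            if o.category == self.target.category
            and o.instance_id != self.target.instance_id
        ]

    def other_distractors(self) -> List[ObjectInstance]:
        # Objects belonging to any category other than the target's.
        return [o for o in self.placed_objects
                if o.category != self.target.category]


# Illustrative episode; every identifier and path here is made up.
target = ObjectInstance("backpack_01", "backpack")
episode = PINEpisode(
    target=target,
    reference_images=["backpack_01_view0.png"],
    descriptions=["a yellow monochrome kanken backpack"],
    placed_objects=[
        target,
        ObjectInstance("backpack_02", "backpack"),
        ObjectInstance("eyeglasses_01", "eyeglasses"),
    ],
)
```

Under these assumptions, an agent succeeds only by reaching `episode.target`, while stopping at any object returned by `same_category_distractors()` or `other_distractors()` would count as a failure.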

a yellow kanken backpack with yellow straps on the top

a yellow monochrome kanken backpack

a photo of a yellow backpack with a strap and red circle on the front

a pair of black squared eyeglasses with a golden plate on the arms

a pair of sunglasses with a black frame and gold detail

a pair of black thick eyeglasses with squared frame and golden hinges

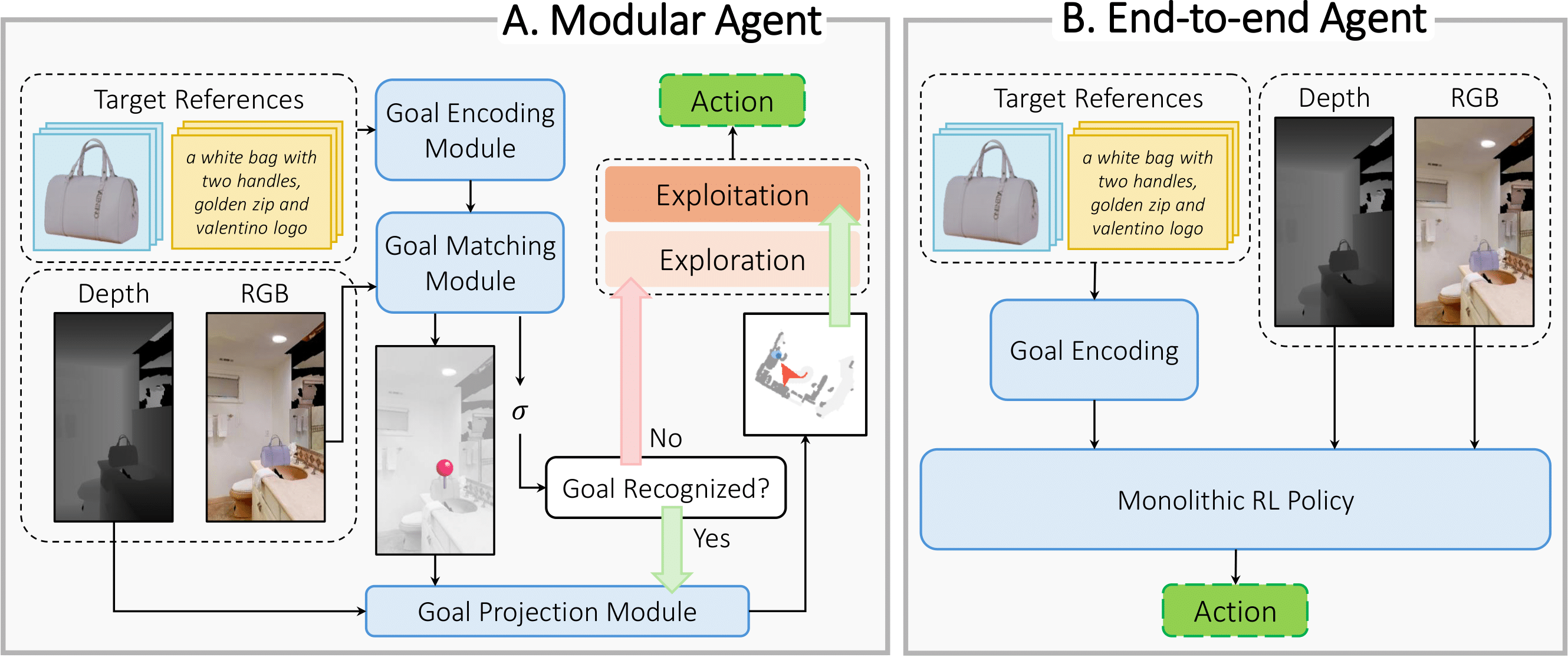

We grouped the evaluated agent baselines into two categories:

A. Modular: the navigation task is decoupled into specialized sub-modules.

B. End-to-end: a monolithic policy is trained using reinforcement learning.
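The difference between the two categories can be sketched as two ways of producing an action from an observation. This is an illustrative outline under assumed interfaces, not the implementation of any evaluated baseline: the sub-module names (`locate_target`, `plan_path`, `follow_path`), the observation dictionary, and the action strings are all hypothetical.

```python
from typing import Any, Callable, Dict

Observation = Dict[str, Any]  # hypothetical observation format
Action = str                  # hypothetical discrete action label


class ModularAgent:
    """Chains specialized sub-modules; each one can be built or swapped separately."""

    def __init__(
        self,
        locate_target: Callable[[Observation], Any],
        plan_path: Callable[[Observation, Any], Any],
        follow_path: Callable[[Observation, Any], Action],
    ) -> None:
        self.locate_target = locate_target
        self.plan_path = plan_path
        self.follow_path = follow_path

    def act(self, obs: Observation) -> Action:
        goal = self.locate_target(obs)     # e.g. match the view to the target
        path = self.plan_path(obs, goal)   # e.g. plan on an internal map
        return self.follow_path(obs, path)


class EndToEndAgent:
    """Wraps one monolithic policy that maps observations directly to actions."""

    def __init__(self, policy: Callable[[Observation], Action]) -> None:
        self.policy = policy

    def act(self, obs: Observation) -> Action:
        return self.policy(obs)


# Stub components so the sketch runs; real modules would be learned or engineered.
modular = ModularAgent(
    locate_target=lambda obs: (1, 0),
    plan_path=lambda obs, goal: [goal],
    follow_path=lambda obs, path: "move_forward" if path else "stop",
)
end_to_end = EndToEndAgent(policy=lambda obs: "turn_left")
```

Both agents expose the same `act` method, so an evaluation loop could treat them interchangeably; the distinction lies in whether the mapping is assembled from separate components or learned as a single policy.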

@inproceedings{barsellotti2024personalized,

title={Personalized Instance-based Navigation Toward User-Specific Objects in Realistic Environments},

author={Barsellotti, Luca and Bigazzi, Roberto and Cornia, Marcella and Baraldi, Lorenzo and Cucchiara, Rita},

booktitle={Advances in Neural Information Processing Systems},

year={2024}

}